Matt Weingarten is a Senior Data Engineer who writes about his work and perspectives on the data space on his Medium blog—go check it out!

Disclaimer

This post continues a series I am writing in collaboration with Validio. These posts are not sponsored, and all thoughts are my own; I use Validio's whitepaper on next-generation data quality platforms (DQPs for short) as a guide. Neither this post nor the collaboration implies any vendor agreement between my employer and Validio.

Introduction

We’re now in the final phase of what makes a next-generation DQP stand out: what enables it to be as successful as possible. To recap, the posts in this series so far are:

Part I: Why End-To-End Data Validation Is A Must-Have In Any Data Stack

Part II: Supporting A Wide Range Of Validation Rules

Part III: Everything You Need To Stop Worrying About Unknown Bad Data

Part IV: How Data Teams Should Get Notified About Bad Data

Part V: What Does It Really Take To Fix Bad Data?

Part VI: The Role Of System Performance & Scalability In Data Quality

We’ll now focus on collaboration and ways of working. How can we enable data quality to be a cross-functional activity?

Multi-User Support

Proper security in data engineering calls for role-based access controls, and a DQP is no different. Certain roles should be able to create reports and validations, while other users should have read-only access. Likewise, users should only be able to edit validations on their own data sources. These controls prevent unauthorized or accidental changes and keep the system secure.
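The access rules above can be sketched in a few lines. This is a minimal illustration, not how any particular DQP implements it: the three roles (`viewer`, `editor`, `admin`) and the idea of tracking which data sources each user owns are assumptions made for the example. In practice, a DQP would more likely delegate this to an identity provider.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    VIEWER = "viewer"  # hypothetical role: read-only access to reports
    EDITOR = "editor"  # hypothetical role: edits validations on owned sources
    ADMIN = "admin"    # hypothetical role: edits validations on any source


@dataclass
class User:
    name: str
    role: Role
    # Data sources this user owns (illustrative; a real system would look
    # this up from a catalog or identity provider).
    owned_sources: set[str] = field(default_factory=set)


def can_edit(user: User, source: str) -> bool:
    """Return True if the user may create or change validations on `source`.

    Every role can read reports in this sketch; only editing is restricted.
    """
    if user.role is Role.ADMIN:
        return True
    if user.role is Role.EDITOR:
        # Editors may only touch validations on their own data sources.
        return source in user.owned_sources
    return False
```

For example, an editor who owns `orders` could change validations on `orders` but not on `payments`, while a viewer could change neither.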

While we don’t have an example of this in our current work, I can definitely see multi-user support becoming a feature of our future data quality tool. Stakeholders could add their own validations to the system, which would in turn open a pull request against our repository of rules. Once that pull request is reviewed and merged, the check would show up in future reports. That would be a great way to make sure our product truly serves our stakeholders.
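One piece of that workflow could be an automated check that runs on each pull request to the rules repository, so that a malformed stakeholder submission is caught before a human reviewer looks at it. The sketch below assumes a hypothetical JSON rule format with `name`, `dataset`, `column`, `check`, and `owner` fields and a hypothetical set of supported check types. None of this reflects an existing tool.

```python
import json

# Hypothetical check types the DQP knows how to run.
ALLOWED_CHECKS = {"not_null", "unique", "regex", "range"}
# Hypothetical fields every submitted rule must define.
REQUIRED_FIELDS = {"name", "dataset", "column", "check", "owner"}


def lint_rule(raw: str) -> list[str]:
    """Return the problems found in a submitted rule; an empty list means OK."""
    try:
        rule = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(rule, dict):
        return ["rule must be a JSON object"]

    problems = [f"missing field: {f}" for f in sorted(REQUIRED_FIELDS - set(rule))]
    if "check" in rule and rule["check"] not in ALLOWED_CHECKS:
        problems.append(f"unknown check type: {rule['check']}")
    if rule.get("check") == "regex" and "pattern" not in rule:
        problems.append("regex checks need a 'pattern'")
    return problems
```

A CI job could fail the pull request whenever `lint_rule` returns a non-empty list, leaving reviewers to focus on whether the rule makes business sense.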

It’s also worth noting that data engineers don’t always know what constitutes “good data.” That is often defined by business context, which is why the appropriate stakeholders need the visibility to see it for themselves. For example, we see many different patterns in software identification (SWID) attributes, but what makes a SWID valid or invalid is defined by the business.
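One way to reflect this split is to keep the definition of validity as data that the business owns, while engineers own only the code that applies it. In the sketch below, the two patterns are placeholders invented for illustration. They are not the real SWID format, which would come from the business stakeholders.

```python
import re

# Placeholder, business-owned definitions of a valid SWID. These patterns
# are hypothetical; stakeholders would maintain the real list.
VALID_SWID_PATTERNS = [
    re.compile(r"SWID-\d{8}"),
    re.compile(r"[A-Z]{3}-[0-9A-F]{4}-[0-9A-F]{4}"),
]


def is_valid_swid(value: str) -> bool:
    # Valid if the whole value matches any business-approved pattern.
    return any(p.fullmatch(value) for p in VALID_SWID_PATTERNS)


def split_by_validity(values: list[str]) -> tuple[list[str], list[str]]:
    """Partition values into (valid, invalid) according to the business rules."""
    valid = [v for v in values if is_valid_swid(v)]
    invalid = [v for v in values if not is_valid_swid(v)]
    return valid, invalid
```

When the business changes its definition, only `VALID_SWID_PATTERNS` needs to change. The validation code itself stays the same.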

Coupling With Data Pipeline Code

While we have discussed integrating DQPs with pipeline logic in previous posts, that coupling should be kept as minimal as possible. Tight coupling between pipeline code and data validation makes it difficult to get a complete overview of validation across the data stack. It also makes it harder to collaborate on data quality, which should be encouraged as much as possible.

When the DQP is separated from the pipeline code, the different skillsets of data engineers and data consumers no longer get in the way. With loose coupling in place, engineers and stakeholders alike can add whatever validations are necessary to catch data quality failures.
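A loosely coupled setup might look like the sketch below. The pipeline makes a single call at its boundary and knows nothing about which rules exist. The rules live in a separate registry, which stakeholders can change without touching pipeline code. The registry layout, check names, and function names are all hypothetical simplifications, not any specific DQP's API.

```python
from typing import Any, Callable, Iterable

Row = dict[str, Any]

# Hypothetical rule registry, maintained outside the pipeline
# (e.g. loaded from a separate rules repository).
RULES: dict[str, list[dict[str, Any]]] = {
    "orders": [
        {"name": "order_id_not_null", "column": "order_id", "check": "not_null"},
        {"name": "amount_non_negative", "column": "amount", "check": "min", "value": 0},
    ],
}

# Generic check implementations owned by the DQP, not by any single pipeline.
CHECKS: dict[str, Callable[[Any, dict[str, Any]], bool]] = {
    "not_null": lambda v, rule: v is not None,
    "min": lambda v, rule: v is not None and v >= rule["value"],
}


def run_validations(dataset: str, rows: Iterable[Row]) -> dict[str, int]:
    """Count failures per rule for a dataset, based only on the registry."""
    rules = RULES.get(dataset, [])
    failures = {rule["name"]: 0 for rule in rules}
    for row in rows:
        for rule in rules:
            if not CHECKS[rule["check"]](row.get(rule["column"]), rule):
                failures[rule["name"]] += 1
    return failures


def orders_pipeline(raw_rows: list[Row]) -> tuple[list[Row], dict[str, int]]:
    # Transformation logic only: drop empty records. Validation is one call,
    # so rules can change without editing this function.
    cleaned = [r for r in raw_rows if r]
    return cleaned, run_validations("orders", cleaned)
```

Adding a new rule for `orders` means adding an entry to `RULES`. `orders_pipeline` stays untouched, which is the kind of separation that lets non-engineers contribute.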

A lack of context around the division of responsibilities, which is often a result of tight coupling, can be frustrating. As data producers, we get plenty of messages from various consumer teams about issues they see, but without the proper context of what exactly they’re seeing, it’s hard to debug those issues and resolve them. We’ve improved communication to make this more straightforward, but it’s not perfect yet.

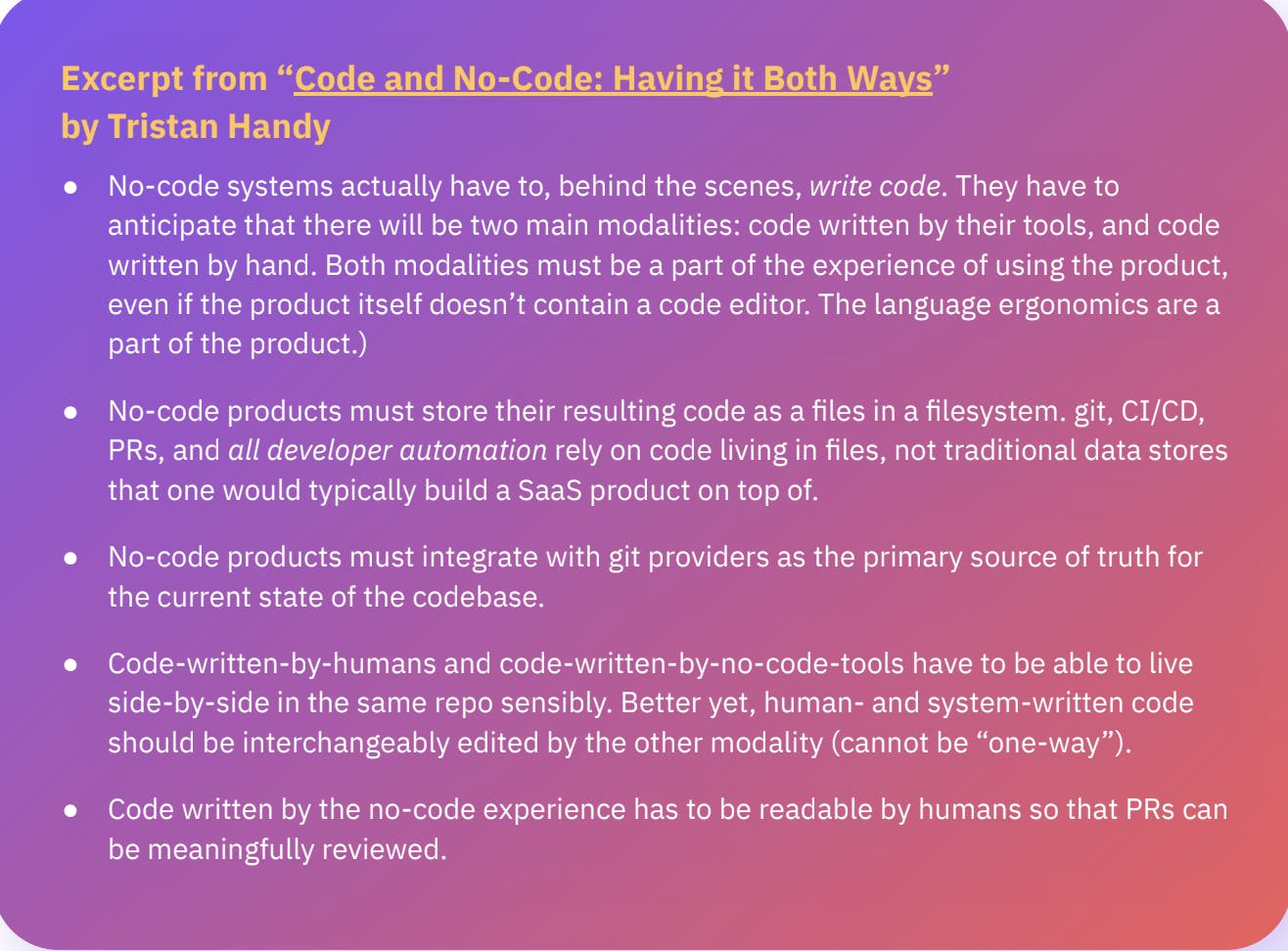

No-Code Interfaces

It has become evident that data quality is not just an endeavor led by data engineers; it’s a cross-functional effort. DQPs should therefore favor no-code interfaces so that more non-technical roles can be involved in the process. Tristan Handy covers this well in the following article, an excerpt of which can be seen below. At the same time, these DQPs still need to provide versioning, templating, and the ability to make quick changes in bulk. This is a difficult technical challenge, but one worth overcoming in order to support no-code interfaces.