6 min read

Data trust and transparency in the AI era.

Join the data-led companies that trust Validio with their data quality.

Discover

Easily profile, find and classify any data with LLMs for semantic search and agentic data profiling.

Configure

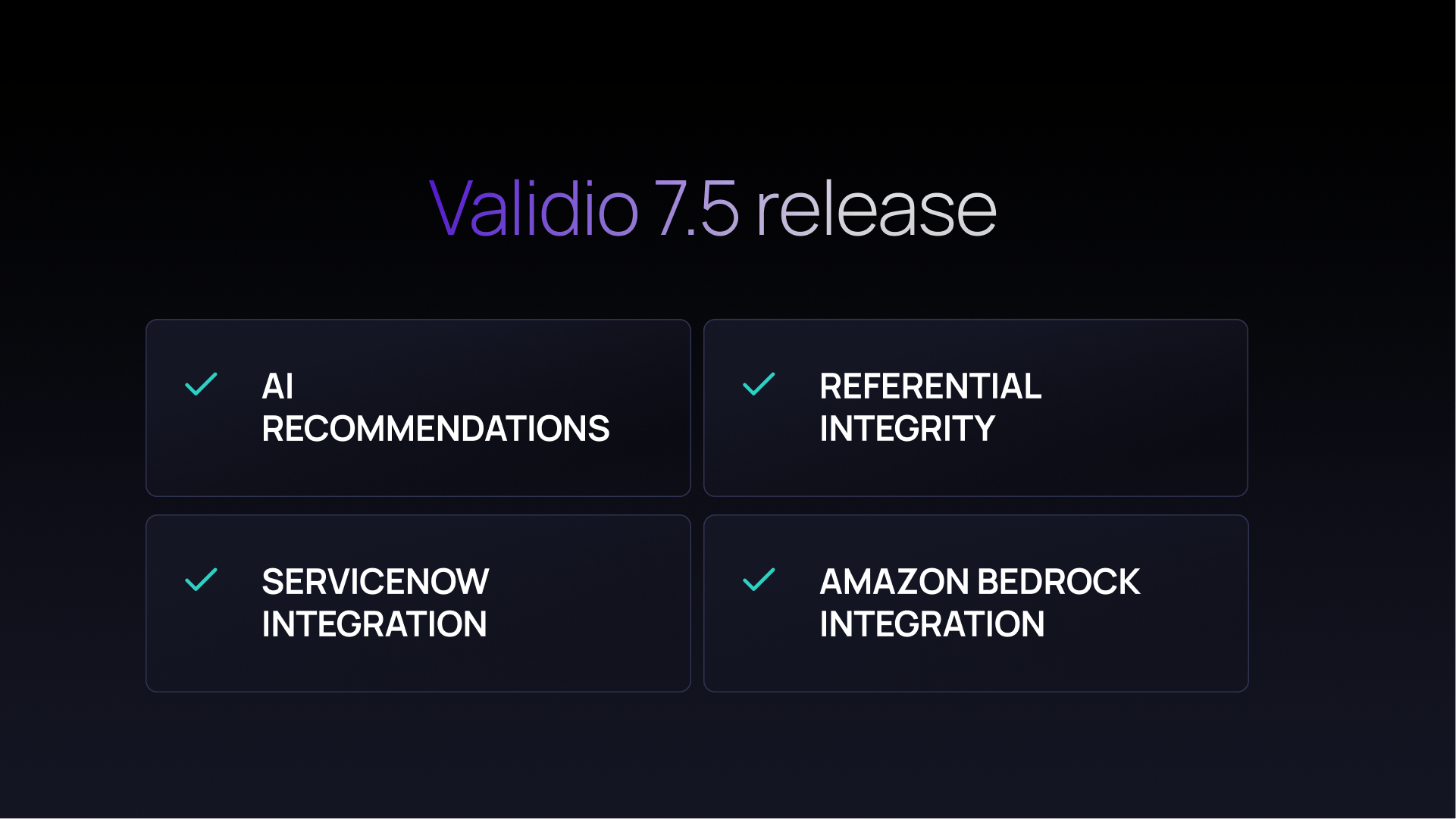

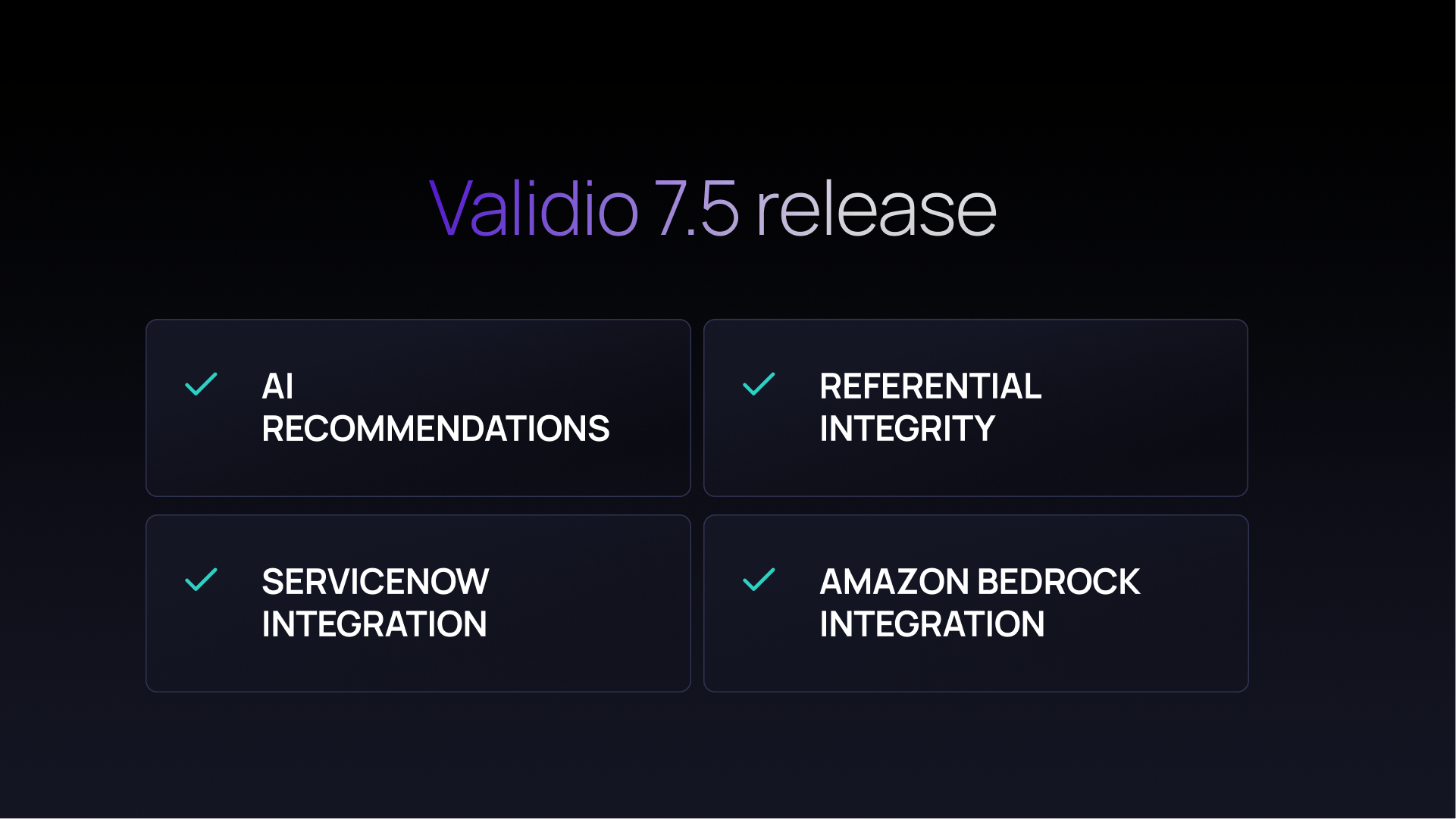

AI-assisted setup with automatic recommendations for instant time-to-value and scalable data quality execution.

Monitor

AI-powered anomaly detection that learns from historical data for industry-leading precision and automated issue management.

Resolve

Understand not just what broke, but why, with agentic root-cause analysis and lineage to pinpoint issue origin and impact.

Guaranteed reliable data.

Everywhere you need it.

Automated anomaly detection

And if you use a tool we don’t work with, just ask and we’ll build it for you.

Validio is built with security and compliance as cornerstones, to help you stay on top of both.

Your data. Your control.

Validio optimizes metric queries and aggregation, minimizing raw data reads. No data is stored post-processing.

Fully compliant. For your safety and ours.

ISO 27001 & SOC 2 Certified: Our processes and application adhere to industry best practices.

We’ll host or you can. You decide.

Validio offers a fully self-hosted deployment option in the customer's Virtual Private Cloud (VPC).

Stay ahead of regulations

Regulations like the EU AI Act and BCBS 239 require data quality. We make sure your data is fit for it.

Sleep well at night knowing your data is right