Okay, so your organization has just decided to bring in a data quality and observability tool. That’s great! It means more effective decision-making through increased trust in your data, quicker time to detection, and time saved with the help of nifty root cause analysis features. And perhaps best of all, fewer “Are you sure these figures are right?” questions from disgruntled executives looking at your dashboard, or from any other consumer of the data you serve.

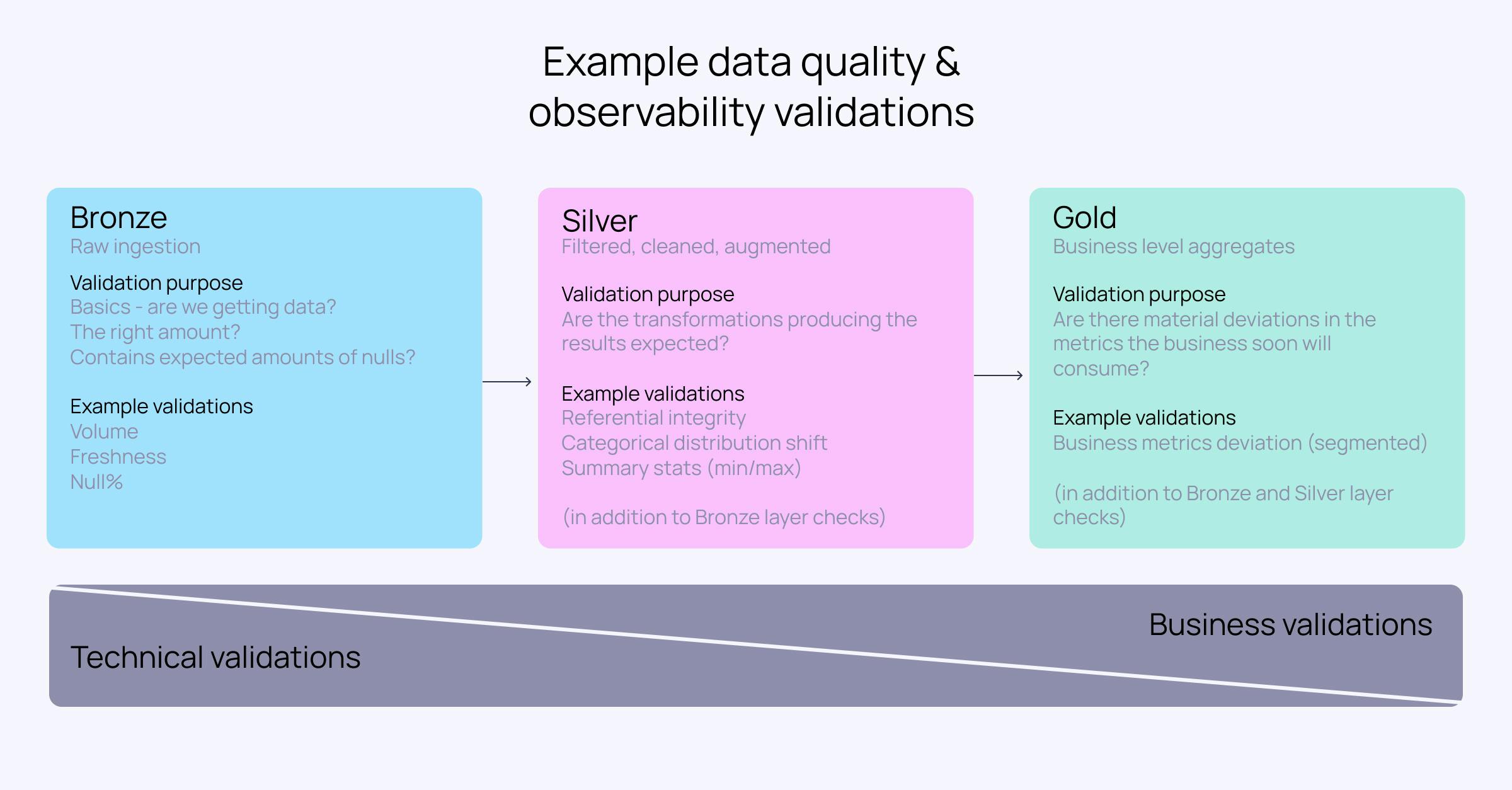

These are all promised outcomes of integrating a data quality and data observability tool into your data stack. However, they all rest on the premise that you have the right data validations in the right places. So where should you start? Which rules/checks/monitors/validations* should you set up first, and on which sources?

In this article, we’ll discuss some best practices for getting started with the most meaningful validators, so that you, your team, and your organization can realize the promised outcomes as quickly as possible.

*I work at Validio, a play on ‘valid I/O’, so you can guess which name we prefer :) I’ll therefore call them ‘validations’ throughout this article.