What is MCP (and why should you care)?

MCP (Model Context Protocol) is an open protocol that solves the "N×M integration problem". Instead of building a custom integration between every AI tool and every data source, you implement the standard once and gain compatibility with any MCP-enabled application. In other words, your AI assistant (Claude, I choose you!) can connect to an MCP server, such as the GitHub MCP server, and fetch data from your repos, analyse code, or merge pull requests. That is great on its own, but the assistant can also connect to multiple MCP servers at the same time, moving data and insights between services intelligently.
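To make the N×M arithmetic concrete, here is a minimal Python sketch. The function names and the example counts (4 AI tools, 6 data sources) are made up for illustration; the only point is that point-to-point integrations grow multiplicatively while a shared protocol grows additively.

```python
def point_to_point(n_clients: int, m_sources: int) -> int:
    """Custom integrations needed when every AI tool talks to every data source directly."""
    return n_clients * m_sources


def via_shared_protocol(n_clients: int, m_sources: int) -> int:
    """Implementations needed when each side implements one shared protocol once."""
    return n_clients + m_sources


# Hypothetical numbers: 4 AI tools and 6 data sources.
clients, sources = 4, 6
print(point_to_point(clients, sources))       # 24 bespoke integrations
print(via_shared_protocol(clients, sources))  # 10 protocol implementations
```

Adding a seventh data source costs four more integrations in the first model and one more implementation in the second.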

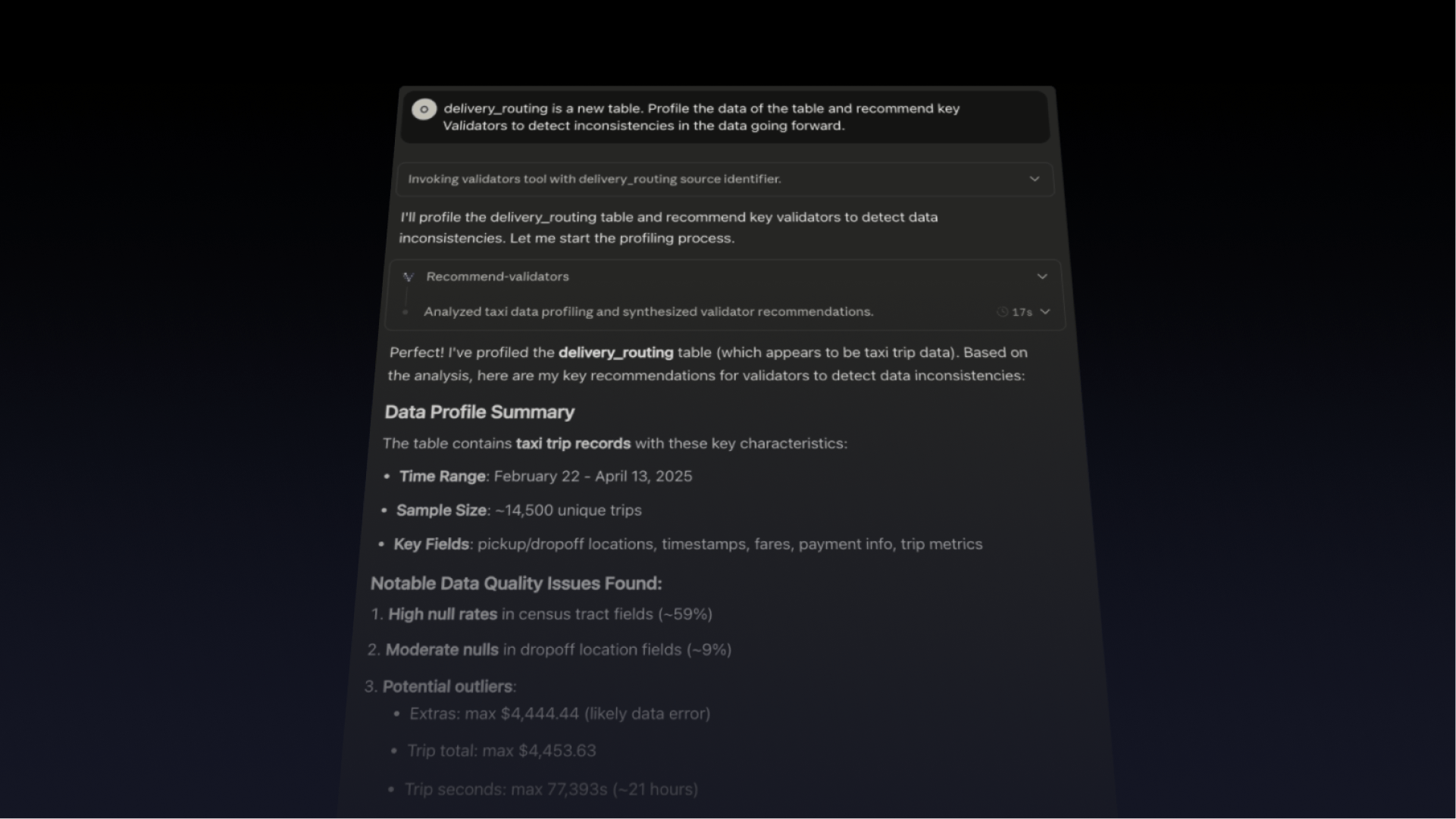

“Hey Claude, check if the latest incident in Validio was caused by an update to the data pipeline code” -> the AI assistant now queries both the GitHub and Validio MCP servers and does the analysis. Hands off the keyboard for you.

The architecture that enables this is pretty simple. You need an MCP server that exposes Tools (executable functions), Resources (structured data the model can read as context), and Prompts (reusable templates), plus an MCP client (Cursor, Claude Code, Gemini CLI, etc.). The MCP server for Validio is hosted by us, so all you need to do is connect to it with your favourite client.
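As a rough illustration of that client/server split, the sketch below models a server as an in-memory object that answers JSON-RPC 2.0 style requests. The method names (`tools/list`, `tools/call`, `resources/read`, `prompts/get`) follow the MCP specification, but everything else is an assumption made for the example: `ToyServer` is not the real SDK, the tool names `latest_incident` and `recent_commits` and their return values are hypothetical, and the response payloads are simplified compared with what a real server sends.

```python
import itertools
from typing import Any, Callable


class ToyServer:
    """In-memory stand-in for an MCP server (illustrative only, not the real SDK)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: dict[str, Callable[..., Any]] = {}  # executable functions
        self.resources: dict[str, str] = {}             # readable context, keyed by URI
        self.prompts: dict[str, str] = {}               # reusable templates

    def handle(self, request: dict[str, Any]) -> dict[str, Any]:
        """Dispatch one JSON-RPC style request to the matching capability."""
        method = request["method"]
        params = request.get("params", {})
        if method == "tools/list":
            result: Any = {"tools": sorted(self.tools)}  # simplified: names only
        elif method == "tools/call":
            result = self.tools[params["name"]](**params.get("arguments", {}))
        elif method == "resources/read":
            result = self.resources[params["uri"]]
        elif method == "prompts/get":
            result = self.prompts[params["name"]]
        else:
            # -32601 is the JSON-RPC 2.0 "Method not found" error code.
            error = {"code": -32601, "message": "Method not found"}
            return {"jsonrpc": "2.0", "id": request["id"], "error": error}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


_request_ids = itertools.count(1)


def call_tool(server: ToyServer, name: str, **arguments: Any) -> Any:
    """Client side: build a tools/call request and unwrap the result."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return server.handle(request)["result"]


# Two hypothetical servers with made-up tools and data.
validio = ToyServer("validio")
validio.tools["latest_incident"] = lambda: {"source": "orders", "status": "open"}

github = ToyServer("github")
github.tools["recent_commits"] = lambda path: [f"Update {path}/transform.py"]

# One client talks to both servers and combines what it gets back.
incident = call_tool(validio, "latest_incident")
commits = call_tool(github, "recent_commits", path=f"pipelines/{incident['source']}")
print(incident, commits)
```

The client code never needs to know how either server implements its tools. It only needs the shared request shape, which is what lets one assistant work across several servers in the same conversation.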

Since Anthropic introduced MCP in November 2024, the ecosystem has exploded. GitHub, Stripe, Sentry, Atlassian, and dozens of others have built MCP servers. OpenAI, Google, and Microsoft have all adopted the protocol, and it's becoming the standard way for AI assistants to interact with external systems. For Validio users, this means more and more ways to combine data quality insights from Validio with the other systems in your organisation.